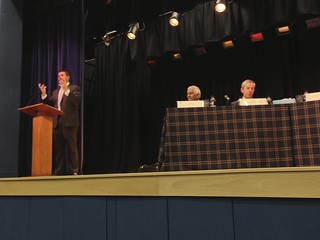

"On the morning of Saturday 14 September, the University hosted three open forum discussions (Masterminds) to debate pressing contemporary issues and concerns for the future. Contributors came from a range of disciplines, representing both the Arts and Sciences, and the University drew on internal strengths and external expertise to address each topic". I spoke in a session entitled: The World our Grandchildren will Inherit: Global Health, Sustainability and Next Generation Technology.

The Chair was Professor Candace Currie OBE from the School of Medicine at the University of St Andrews and the speakers included:

- Professor Sissela Bok, Senior Visiting Fellow at the Harvard Center for Population and Development Studies at Harvard University

- Professor Stephen Gillespie, Sir James Black Chair of Medicine and Director of Research at the University of St Andrews

- The Rt Hon The Lord Patel KT MBChB (St Andrews) FMedSci FRSE FRCOG, Chancellor of the University of Dundee, member of the House of Lords and a key contributor to the field of high-risk obstetrics

- Professor Aaron Quigley, the Chair for Human Computer Interaction at the University of St Andrews’ School of Computer Science

My speech was as follows:

Today, 600 years after this University was founded, let us take a moment to think about where we have come from, where we are today and the world our grandchildren will inherit.

Since the founding of this University, the world's population has grown roughly twentyfold, life expectancies have tripled and technology has become ever more interwoven into everyday life. However, average life expectancies vary by 40 years across the world, access to basic health care varies dramatically, and our use of resources differs markedly, as does our access to technology to support our work and lives. Of course, there are too many technologies to talk about today, so I'd like to focus on digital technologies and computing and their potential for humanity and the billions of people on Earth.

The world of 1413 relied on technology, but not as we do today. It would be another 200 years before an English poet used the word "computer" to describe someone whose job was tabulating numbers. At around the same time, one of our graduates, John Napier, was proposing the use of logarithms. Within half a century of logarithms came the slide rule, a calculation aid or what some might call a mechanical analog computer. The device remained in use long after its introduction: 350 years later, slide rules were carried on five Apollo space missions, including missions to the Moon. Through the University's first five centuries, we see increasingly sophisticated mechanical calculators, programmable devices such as the Jacquard loom, Babbage's designs for a difference engine, and tabulating machines. By 1890, an electric tabulating system using punched cards was being used to tabulate the US census rapidly.

Of course, it is in the past 70 years that we have seen the introduction and advancement of the electronic digital computer. These machines can be programmed to solve more than one kind of problem and, in their most basic form, consist of memory, long-term storage, a processor, an instruction set, and input and output.

Our use of the digital computer has undergone dramatic change during this time. In the beginning, each computer was shared by many people, primarily in government, industry or academia, whereas today we are moving towards many computers or processing elements per person: everything from the home computer, tablet and smartphone to the embedded devices in your car or washing machine. The past three decades have seen computers enter our day-to-day lives and, in parallel, become essential to the services and infrastructure that support them.

Throughout this period, researchers and visionaries have considered the future of next-generation technology. Can we augment human intellect? Can access to information be as natural as our own thinking? What happens when computing and communications are ubiquitous? As Mark Weiser said, "The most profound technologies are those that disappear. They weave themselves into the fabric of everyday life until they are indistinguishable from it."

It is difficult for anyone to predict the future. We need only look to our past to see how amusingly wide of the mark, or occasionally prescient, people have been. Picture how a townsperson from the St Andrews of 1413 might view the world of today. What would they find unusual, strange, inexplicable or magical? As Arthur C. Clarke said, "Any sufficiently advanced technology is indistinguishable from magic." Would our electricity, airplanes, computers and gestural interfaces appear magical?

Digital technologies, computing and communications have become technologies we rely on, yet they are unlike the technologies that went before them because of three important characteristics: data, programming and connectivity.

Digital technologies can produce and consume vast stores of data, information and context about us, our environment and the world at large. Such instant access to information will continue to change our lives. We can already see how the use of data is changing the study of medicine, our planning for health systems, and science and engineering. There are two areas of data use, related to global health and sustainability, that I'd like to discuss.

Firstly, the "Internet of Things", or the "nervous system for the planet". This is where any powered device can be connected to a network, giving rise to new devices, sensors and services. Through monitoring with simple sensors distributed throughout the home, large power savings can be achieved. Expecting our homes and infrastructure to help us improve our energy and resource use will in time appear obvious, unremarkable and simply "how things should be" as we take steps towards more sustainable living.

Secondly, personal informatics is a large and growing area of study. You collect data that is personally relevant to you for the purpose of self-reflection and self-monitoring. Using this data, you can gain knowledge about your behaviors, habits and thoughts. Health is often what first draws people to personal informatics. The simple collection of data can allow people to monitor their movements during the day or their exercise levels against their goals. In each case, the ability to capture and reflect on this data helps people change their behaviors.

The second differentiating factor of digital technology is programming, which lets us process information in new ways. In the past we relied on analog devices to tabulate numbers. Today, we can construct hardware and software systems that allow us to offload cognitive effort onto computation.

In the near term, contextual computing will allow our digital technologies to know more about us and personalize accordingly. This context includes our location, identity, who we are with, our user model, environmental, social and resource knowledge, along with our activity, schedule, agenda and even our physiological data. All of this can be used to change how applications support us in our day-to-day lives. Imagine a nurse whose schedule, location and activity can be cross-referenced with the patient she is attending, to help avoid mistakes. Or a surgeon in a difficult operation who can draw on external guidance or notes. Or even improving how hospitals operate by tracking where every wheelchair, infusion pump or other resource is. Our ability to program devices allows us to form and reform them.

The third differentiating factor is connectivity, or the ability to interconnect devices and hence people. This connectivity has a long history, but it is in connecting every device and interconnecting every person that the true power of digital technology emerges. About 60 years before St Andrews was founded, the Black Death swept across Europe, wiping out nearly half of its population. Today we can ask: does our ability to communicate allow us to identify new pandemics? Does it allow the rapid dissemination of information to mitigate the next Black Death? Connectivity is already making remote medical treatment possible, enabling new kinds of groups to form to solve social problems, helping to overcome social isolation, and allowing groups of connected citizens or scientists to emerge rapidly. By connecting people, we can connect and extend our thinking.

Data, programming and connectivity are already impacting the world our grandchildren will inherit. However, what about future generations?

I think the next-generation technologies that will have the greatest impact concern the bandwidth to the brain. By this I don’t mean brain-computer interfaces. Instead, I mean the use of technology to enhance our senses, augment our intellect, draw on the collective cognitive efforts of many, or simply improve our experience of being alive.

I see the world our grandchildren will inherit as one in which our digital and physical worlds are connected ever more closely. Not in some dystopian cyborg future, but simply as the next generation of the eyeglass or contact lens, of reading by touch with braille, of pacemakers or cochlear implants.

Researchers, engineers and scientists around the world are looking at ways to enhance our senses for myriad reasons, from correction to replacement.

Let us consider three of our senses: touch, hearing and sight.

Our sense of touch relies on a sensory system spread across and through our body, and a large portion of the human brain is involved in processing and understanding it. It is related to our ability to sense pain, temperature and proprioception. Let’s take two real examples of why we might need to augment this sense digitally. First, let’s consider the eye surgeon. A common eye operation is cataract removal, performed when the lens of the eye becomes clouded. The eye is numbed, cuts are made, tissue is lifted and a pair of forceps is used to create a circular hole in the bag in which the lens sits. A probe is then used to break up and emulsify the lens with ultrasound waves. Once this is complete, a foldable plastic lens is inserted. This is a quick operation, but witnessing it for the first time, it felt like an age to me. The operation demands fine motor control and the ability to sense pressure and see tissue moving, yet the tools for training do not take full advantage of haptic interfaces or other means of delivering an augmented sense of touch. Indeed, trainee surgeons practise by peeling the skin off a grape with forceps to emulate the experience.

A second example: consider how you, your child or your grandchild learnt to write. Has it changed in the last 10, 50 or 100 years? The handwriting we use today is rooted in the scripts associated with the Italian Renaissance. Three hundred years ago, John Clark, an English writing master, expected his students to get ‘an exact notion, or idea of a good letter by frequent and nice observation of a correct copy’ through imitation. Does that sound very different from how you learnt to write, or how your grandchildren are learning? Of course, new teaching methods have improved how we instruct children to write, but is there anything that helps them understand how to hold the pen, how much pressure to apply or which movements to make? We have relied on sight and hearing to teach, without allowing learners to feel the movement we are trying to explain.

Here in St Andrews we are studying how to sense, using an instrumented pen, how someone is writing: the motion, the pressure, the timing, the posture, how they follow the lines and join shapes, the flow and the angles. We then try to feed information back to the learner in subtle, meaningful and helpful ways. Our goal is to help steer one’s learning and experience down the path to fluid, beautiful writing and away from the painful and illegible.

It’s clear that augmenting our sense of touch opens up new opportunities.

Our sense of hearing is often used for augmentation and to bridge the digital-physical divide. Consider the Australian cochlear implant, a surgically implanted electronic device that provides a sense of sound to a person who is profoundly deaf or severely hard of hearing. Implanted in over a quarter of a million people, it is a form of augmentation. You might think no one would oppose this, as it addresses a profound need, but some do. The implantation of children with these devices has been described as "cultural genocide". For me, this is a computer in every respect I described earlier, and it opens up the discussion of what other kinds of audio augmentation and enhancement might become commonplace and useful: ambient alerts, navigation cues, or the ability to listen to a language you do not understand and have it translated automatically in real time.

Finally, sight, in many ways our primary sense. We know a large portion of the human brain is involved with our vision. Today, much of our interaction with digital technologies is "eyes down". Take the time to look at those around you next time you are on a train or bus. Are they looking up and out at the world, or are they transfixed by their little “window of interaction” with the digital world? Augmenting human vision meets an immediate medical need for those who lose their sight, for example through an accident. Researchers across the world are exploring the potential of the bionic eye. In one approach, a camera captures images and sends them via a processor to a chip implanted under the skull. This chip stimulates the visual cortex via electrodes, allowing the brain to interpret the images. Such eyes aim to replace lost vision, but it seems clear that captured video and digital data will come to be blended together. How we might use head-worn displays is today an area of active industrial interest in wearable computing.

Here in St Andrews we have researchers modelling the eye to understand how we see images, and others looking at ways to measure the distance to a display and adapt images accordingly to improve legibility or information awareness. We can imagine many other examples of displays that help health workers while their hands are busy, people navigating, or people simply exploring a new town. Again, with sufficient processing we can imagine the universal translation scenario, where you look at a sign in a foreign language and, if you wish, automatically see a translation.

I’m sure some of these ideas will seem obvious, or simply “the way the world should be”, while others may be confronting. We should not shy away from ideas that challenge us; instead, we should debate and explore them.

I hope that in 600 years’ time, academics like my colleagues and me will still be asking the questions society hasn’t yet thought to ask, to further human knowledge for the benefit of us all.